If you've been dealing with data in the last few years, you've inevitably also come across the concept of Big Data and NoSQL databases. What do these terms mean for data quality efforts? I believe they can have a great impact. But I also think they are difficult concepts to juggle because the understanding of them varies a lot.

For the purpose of this writing, I will simply say that in my opinion Big Data and NoSQL expose us as data workers to two new general challenges:

- Much more data than we're used to working with, leading to more computing time and new techniques for even handling those amounts of data.

- More complex data structures that are not necessarily based on a relational way of thinking.

So who will be exposed to these challenges? As a developer of tools I will definitely be exposed to both challenges. But will the end-user be challenged by both of them? I hope not. But people need good tools in order to do clever things with data quality.

The "more data" challenge is primarily one of storage and performance. And since storage isn't that expensive, I mostly consider it to be a performance challenge. And for the sake of argument, let's just assume that the tool vendors should be the ones mostly concerned about performance. If the tools and solutions are well designed by the vendors, then at least most of the performance issues should be tackled there.

But the complex data structures challenge is a different one. It has surfaced for a lot of reasons, including:

- Favoring performance by e.g. eliminating the need to join database tables.

- Favoring ease of use by allowing logical grouping of related data even though granularity might differ (e.g. storing orderlines together with the order itself).

- Favoring flexibility by not having a strict schema to perform validation upon inserting.

Typically the structure of such records isn't tabular. Instead it is often based on key/value maps, like this:

    {
      "orderNumber": 123,
      "priceSum": 500,
      "orderLines": [
        { "productId": "abc", "productName": "Foo", "price": 200 },
        { "productId": "def", "productName": "Bar", "price": 300 }
      ],
      "customer": { "id": "xyz", "name": "John Doe", "country": "USA" }
    }

Looking at this you will notice some data structure features which are alien to the relational world:

- The orderlines and customer information are contained within the same record as the order itself. In other words: We have data types like arrays/lists (the "orderLines" field) and key/value maps (each orderline element, and the "customer" field).

- Each orderline has a "productId" field, which probably refers to a detailed product record. But it also contains a "productName" field, since this is probably the most wanted piece of information when presenting/reading the record. In other words: Redundancy is added for ease of use and for performance.

- The "priceSum" field is also redundant, since we could easily derive it by visiting all orderlines and summing their prices. Here too, redundancy is added for performance.

- Some of the same principles apply to the "customer" field: It is a key/value type, and it contains both an ID and some redundant information about the customer.
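The redundancy described above is also something a data quality check can exploit: a stored total can be compared with the value derived from the orderlines. The following is a minimal Python sketch of that idea using the example order. It is not DataCleaner functionality, and the function name `price_sum_is_consistent` is made up for illustration.

```python
import json

# The example order record from above, as a JSON document.
ORDER_JSON = """
{
  "orderNumber": 123,
  "priceSum": 500,
  "orderLines": [
    { "productId": "abc", "productName": "Foo", "price": 200 },
    { "productId": "def", "productName": "Bar", "price": 300 }
  ],
  "customer": { "id": "xyz", "name": "John Doe", "country": "USA" }
}
"""


def price_sum_is_consistent(order):
    """Return True if the stored priceSum equals the sum of the orderline prices."""
    derived = sum(line["price"] for line in order.get("orderLines", []))
    return order.get("priceSum") == derived


order = json.loads(ORDER_JSON)
print(price_sum_is_consistent(order))  # True
```

A record where the two values disagree would be a candidate for closer inspection, since either the total or one of the orderlines has probably been changed without updating the other.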

Support for such data structures is traditionally difficult for database tools. As tool producers, we're used to dealing with relational data, joins and the like. But in the world of Big Data and NoSQL we're up against the challenges of complex data structures, which include traversal and selection within the same record.

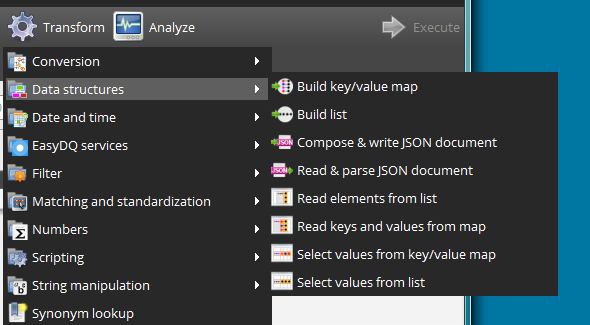

In DataCleaner, these issues are primarily handled using the "Data structures" transformations, which I feel deserve a little attention.

Using these transformations you can both read and write the complex data structures that we see in Big Data projects. To explain the 8 "Data structures" transformations, I will try to define a few terms used in their naming:

- To "Read" a data structure such as a list or a key/value map means to view each element in the structure as a separate record. This enables you to profile e.g. all the orderlines in the order-record example.

- To "Select" from a data structure such as a list or a key/value map means to retrieve specific elements while retaining the current record granularity. This enables you to profile e.g. the customer names of your orders.

- Similarly you can "Build" these data structures. The record granularity of the built data structures is the same as the current granularity in your data processing flow.

- And lastly I should mention JSON because it is in the screenshot. JSON is probably the most common literal format for representing data structures like these. And indeed the example above is written in JSON format. Obviously we support reading and writing JSON objects directly in DataCleaner.
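To make the terms "Read", "Select" and "Build" a bit more concrete, here is a rough Python sketch of the three operations applied to the example order. It only illustrates how record granularity changes (or doesn't). The function names are hypothetical, and this is not how DataCleaner's transformations are implemented or configured.

```python
order = {
    "orderNumber": 123,
    "priceSum": 500,
    "orderLines": [
        {"productId": "abc", "productName": "Foo", "price": 200},
        {"productId": "def", "productName": "Bar", "price": 300},
    ],
    "customer": {"id": "xyz", "name": "John Doe", "country": "USA"},
}


def read_list(record, list_field, keep=("orderNumber",)):
    """'Read': yield one output record per list element (granularity changes)."""
    for element in record.get(list_field, []):
        parent = {key: record[key] for key in keep}
        yield {**parent, **element}


def select_from_map(record, map_field, key):
    """'Select': pick one value from a nested map (still one result per order)."""
    return record.get(map_field, {}).get(key)


def build_map(record, keys):
    """'Build': combine fields of the current record into a key/value map."""
    return {key: record[key] for key in keys}


order_lines = list(read_list(order, "orderLines"))
customer_name = select_from_map(order, "customer", "name")
summary = build_map(order, ["orderNumber", "priceSum"])

print(len(order_lines))  # 2
print(customer_name)     # John Doe
print(summary)           # {'orderNumber': 123, 'priceSum': 500}
```

Note how "Read" turns one order into two orderline records, while "Select" and "Build" both produce exactly one result per order.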

Using these transformations we can overcome the challenge of handling the new data structures in Big Data and NoSQL.

Another important aspect that comes up whenever there's a whole new generation of databases is that the way you interface with them is different. In DataCleaner (or rather, in DataCleaner's little-sister project MetaModel) we've done a lot of work to make sure that the abstractions already known can be reused. This means that querying and inserting records into a NoSQL database works exactly the same as with a relational database. The only difference of course is that new data types may be available, but that builds nicely on top of the existing relational concepts of schemas, tables, columns etc. And at the same time we do all we can to optimize performance, so that the choice of querying technique is not left to the end-user but is made according to the situation. Maybe I'll try and explain how that works under the covers some other time!