As a follow-up to my previous post about how we make DataCleaner super-fast by applying some nice multi-threading tricks, The DataCleaner engine explained, I would now like to touch upon another performance booster: Push down query optimization.

To my knowledge "push down query optimization" is a trick that only very few tools support, since it requires a flow model that was actually built for it. The idea is that by inspecting an execution flow the tool might be able to identify steps in the beginning or in the end of the flow that can be replaced by query modifications.

For example, if your data flow begins with a filtering action that removes all records of a given type or restricts the further processing to only be the first 1000 records or something like that. Most tools simply require you to write some SQL yourself, which is also doable, but as I've said before on this blog, I think writing SQL is a barrier to productivity, creativity and good data quality results. So in DataCleaner we do not offer this option, because we have something that is much, much nicer. That solution is push down query optimization!

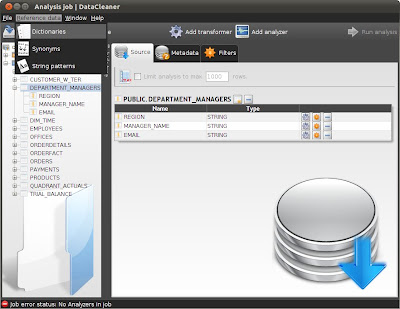

Let me illustrate. I will be using the Sakila example database for MySQL:

Say you want to do a simple pattern finding of film titles in the Sakila database, you would select the title column and you would get a result like this:

In the DataCleaner logs we can see what queries are actually fired to the database. Open up the log file (in the logs folder) and inspect datacleaner.log. You will find a line like this:

Executing query: SELECT `nicer_but_slower_film_list`.`title` FROM sakila.`nicer_but_slower_film_list`

That's fine. You can inspect the results closer, but that's not what this topic is about, so I'll carry on... Now let's say you want to refine your job. Let's instead see how the pattern distribution is if we want to only look at a few categories of films. So I add a 'Equals' filter to only select horror, sports and action movies and apply it to my pattern finder:

If we run the job, and inspect the log file again, we see now this entry:

Executing query: SELECT `nicer_but_slower_film_list`.`title`, `nicer_but_slower_film_list`.`category` FROM sakila.`nicer_but_slower_film_list` WHERE (`nicer_but_slower_film_list`.`category` = 'Horror' OR `nicer_but_slower_film_list`.`category` = 'Action' OR `nicer_but_slower_film_list`.`category` = 'Sports')

What's surprising here is that the filter actually got query optimized. Not all filters have this ability, since some of them have richer functionality than can be expressed as a query modification. But some of them do, and typically these are the small functions that make a big difference.

Let's also apply a Max rows filter that limits the analysis for only 20 records and chain it so that it depends on the Equals filter:

If we now run the job, both filters will have been applied to the query:

Executing query: SELECT `nicer_but_slower_film_list`.`title`, `nicer_but_slower_film_list`.`category` FROM sakila.`nicer_but_slower_film_list` WHERE (`nicer_but_slower_film_list`.`category` = 'Horror' OR `nicer_but_slower_film_list`.`category` = 'Action' OR `nicer_but_slower_film_list`.`category` = 'Sports') LIMIT 20

That means that we do as much as we can to optimize the query, without ever having to ask the user to help us. So if you modify the logical job, the physical queries are automatically adapted! This is why push down query optimization is a superior optimization technique to raw SQL. Happy data cleaning!

Additional information for developers: If you're developing plugins to DataCleaner and want to make a query optimized filter, then simply make sure you implement the QueryOptimizedFilter interface! Happy coding!

In modern software development

In modern software development