First step: You have an idea for your extension. My idea was to integrate the Groovy language with DataCleaner, offering an advanced scripting option similar to the existing JavaScript transformer, just a lot more powerful. The task would give me the chance to 1) get acquainted with the Groovy language, 2) solve some of the more advanced uses of DataCleaner by giving a completely open-ended scripting option and 3) blog about it. The third point is important to me, because we currently have a Community Contributor Contest, and I'd like to invite extension developers to participate.

Second step: Build a quick prototype. This usually starts by identifying which type of component(s) you want to create. In my case it was a transformer, but in some cases it might be an analyzer. The choice between the two essentially comes down to this: Does your extension pre-process or transform the data in a way that should become part of a flow of operations? Then it's a Transformer. Or is it something that will consume the records (potentially after they have been pre-processed) and generate some kind of analysis result or write the records somewhere? Then it's an Analyzer.
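
To make the distinction concrete, here is a minimal sketch of the two roles. The interfaces `RowTransformer` and `RowAnalyzer`, the column names and the map-based row are simplified, hypothetical stand-ins invented for this illustration; they are not DataCleaner's actual API.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified stand-ins for illustration only; not DataCleaner's real interfaces.
interface RowTransformer {
    // Returns new values that become additional columns for downstream components.
    String[] transform(Map<String, Object> row);
}

interface RowAnalyzer<R> {
    // Consumes a row; nothing flows further down the chain.
    void run(Map<String, Object> row);

    // Produces the analysis result once all rows have been consumed.
    R getResult();
}

class UpperCaseTransformer implements RowTransformer {
    public String[] transform(Map<String, Object> row) {
        return new String[] { String.valueOf(row.get("name")).toUpperCase() };
    }
}

class ValueCountAnalyzer implements RowAnalyzer<Map<String, Integer>> {
    private final Map<String, Integer> counts = new LinkedHashMap<String, Integer>();

    public void run(Map<String, Object> row) {
        String key = String.valueOf(row.get("name (upper)"));
        Integer current = counts.get(key);
        counts.put(key, current == null ? 1 : current + 1);
    }

    public Map<String, Integer> getResult() {
        return counts;
    }
}

class FlowSketch {
    static Map<String, Integer> runFlow(List<String> names) {
        RowTransformer transformer = new UpperCaseTransformer();
        ValueCountAnalyzer analyzer = new ValueCountAnalyzer();
        for (String name : names) {
            Map<String, Object> row = new LinkedHashMap<String, Object>();
            row.put("name", name);
            // The transformer's output becomes a new column in the flow ...
            row.put("name (upper)", transformer.transform(row)[0]);
            // ... which the analyzer then consumes to build its result.
            analyzer.run(row);
        }
        return analyzer.getResult();
    }

    public static void main(String[] args) {
        System.out.println(runFlow(Arrays.asList("kasper", "Kasper", "groovy")));
    }
}
```

The transformer sits in the middle of the flow and only adds values, while the analyzer sits at the end and is the only part that holds state across rows.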

The API for DataCleaner was designed to be very easy to use. The idiom has been: 1) The obligatory functionality is provided in the interface that you implement. 2) The user-configured parts are injected using the @Configured annotation. 3) The optional parts can be injected if you need them. In other words, this is very much inspired by the idea of Convention-over-Configuration.
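
For readers who have not worked with annotation-driven injection before, the following is a rough sketch of the general technique using plain Java reflection. The `UserConfigured` annotation, `GreetingComponent` and `ConfigInjector` are hypothetical names made up for this example; DataCleaner ships its own @Configured annotation and its own injection machinery, which this sketch does not attempt to reproduce.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.HashMap;
import java.util.Map;

// Hypothetical annotation for illustration; it only mimics the idea behind @Configured.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface UserConfigured {
}

class GreetingComponent {
    @UserConfigured
    String greeting;

    @UserConfigured
    int repeat;

    // Not annotated, so the injector leaves it alone.
    String internalState = "untouched";

    String render(String name) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < repeat; i++) {
            sb.append(greeting).append(' ').append(name).append('\n');
        }
        return sb.toString();
    }
}

class ConfigInjector {
    // Copies each user-supplied value into the annotated field of the same name.
    static void inject(Object component, Map<String, Object> userConfig) {
        for (Field field : component.getClass().getDeclaredFields()) {
            if (field.isAnnotationPresent(UserConfigured.class) && userConfig.containsKey(field.getName())) {
                try {
                    field.setAccessible(true);
                    field.set(component, userConfig.get(field.getName()));
                } catch (IllegalAccessException e) {
                    throw new IllegalStateException("Could not inject " + field.getName(), e);
                }
            }
        }
    }

    public static void main(String[] args) {
        GreetingComponent component = new GreetingComponent();
        Map<String, Object> userConfig = new HashMap<String, Object>();
        userConfig.put("greeting", "hello");
        userConfig.put("repeat", 2);
        inject(component, userConfig);
        System.out.print(component.render("Kasper"));
    }
}
```

The component itself contains no configuration-handling code: it declares what it needs, and the framework fills it in before the component is used.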

So, I wanted to build a Transformer. This was my first prototype, which I could stitch together quite quickly after reading the Embedding Groovy documentation. Simply implementing the Transformer interface revealed what I needed to provide for DataCleaner to operate:

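    // DataCleaner API imports (Transformer, TransformerBean, Configured, ...) omitted
    import groovy.lang.GroovyObject;
    import java.util.LinkedHashMap;
    import java.util.Map;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    @TransformerBean("Groovy transformer (simple)")
    public class GroovySimpleTransformer implements Transformer<String> {

        private static final Logger logger = LoggerFactory.getLogger(GroovySimpleTransformer.class);

        // User-configured properties, injected by DataCleaner
        @Configured
        InputColumn<?>[] inputs;

        @Configured
        String code;

        private GroovyObject _groovyObject;

        public OutputColumns getOutputColumns() {
            return new OutputColumns("Groovy output");
        }

        public String[] transform(InputRow inputRow) {
            // Compile lazily when the first record arrives
            if (_groovyObject == null) {
                _groovyObject = compileCode();
            }

            // Pass the input values to the script as a map keyed by column name
            final Map<String, Object> map = new LinkedHashMap<String, Object>();
            for (InputColumn<?> input : inputs) {
                map.put(input.getName(), inputRow.getValue(input));
            }

            final Object[] args = new Object[] { map };
            String result = (String) _groovyObject.invokeMethod("transform", args);
            logger.debug("Transformation result: {}", result);
            return new String[] { result };
        }

        private GroovyObject compileCode() {
            // omitted
        }
    }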

Third step: Test the prototype. A simple unit test confirms that the transformer compiles the script and returns the expected value:

    public class GroovySimpleTransformerTest extends TestCase {

        public void testScenario() throws Exception {
            GroovySimpleTransformer transformer = new GroovySimpleTransformer();

            InputColumn col1 = new MockInputColumn("foo");
            InputColumn col2 = new MockInputColumn("bar");

            transformer.inputs = new InputColumn[] { col1, col2 };
            transformer.code =
                "class Transformer {\n" +
                " String transform(map){println(map); return \"hello \" + map.get(\"foo\")}\n" +
                "}";

            String[] result = transformer.transform(new MockInputRow().put(col1, "Kasper").put(col2, "S"));

            assertEquals(1, result.length);
            assertEquals("hello Kasper", result[0]);
        }
    }

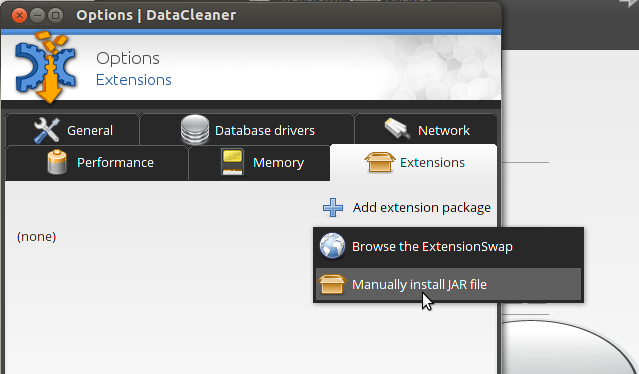

Fourth step: Do the polishing that makes it look and feel like a usable component. It's time to build the extension and see how it works in DataCleaner. When the extension is bundled in a JAR file, you can simply click Window -> Options, select the Extensions tab and click Add extension package -> Manually install JAR file:

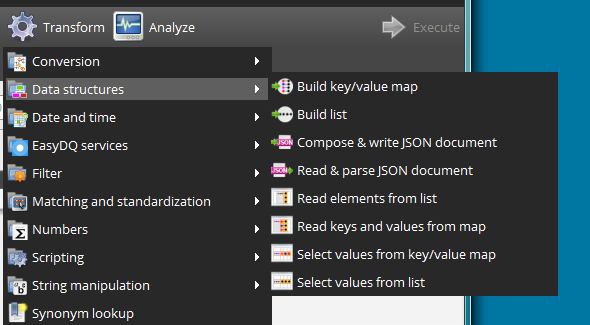

After registering your extension you will be able to find it in DataCleaner's Transformation menu (or if you built an Analyzer, in the Analyze menu).

In my case I discovered several sub-optimal aspects of my extension. Here's a list of them, and how I solved each one:

| What? | How? |
|---|---|
| My transformer had only a default icon. | Icons can be defined by providing a PNG icon (32x32 pixels) with the same name as the transformer class, in the JAR file. In my case the transformer class was GroovySimpleTransformer.java, so I made an icon available at GroovySimpleTransformer.png. |
| The 'Code' text field was a single-line field and did not look like a code editing field. | Since the API is designed for Convention-over-Configuration, using a plain String property for the Groovy code was maybe a bit naive. There are two strategies to pursue if you have properties that need special rendering in the UI: provide more metadata about the property (quite easy), or build your own renderer for it (most flexible, but also more complex). In this case I was able to simply provide more metadata, using the @StringProperty annotation: `@Configured @StringProperty(multiline = true, mimeType = "text/groovy") String code;` The default DataCleaner string property widget will then provide a multi-line text field with syntax coloring for the specified MIME type. |
| The compilation of the Groovy class was done when the first record hit the transformer, but ideally we would want to do it before the batch even begins. | This point is actually quite important, also to avoid race conditions in concurrent code and other nasty scenarios. Additionally, it helps DataCleaner validate the configuration before actually kicking off the batch job. The trick is to add a method with the @Initialize annotation. If you have multiple items to initialize, you can even add more such methods. In our case, it was quite simple: `@Initialize public void init() { _groovyObject = compileCode(); }` |
| The transformer was placed in the root of the Transformation menu. | This was fixed by applying the following annotation on the class, moving it into the Scripting category: `@Categorized(ScriptingCategory.class)` |
| The transformer had no description text while hovering over it. | The description was added in a similar fashion, with a class-level annotation: `@Description("Perform a data transformation with the use of the Groovy language.")` |
| After execution it would be good to clean up resources used by Groovy. | Similarly to the @Initialize annotation, I can also create one or more destruction methods, annotated with @Close. In the case of the Groovy transformer, there are some classloader-related items that can be cleared after execution this way. |
| In a more advanced edition of the same transformer, I wanted to support multiple output records. | DataCleaner does support transformers that yield multiple (or even zero) output records. To achieve this, you can inject an OutputRowCollector instance into the Transformer, as in the MyTransformer example that follows this table. Side note: Users of Hadoop might recognize the OutputRowCollector as similar to mappers in Map-Reduce. Transformers, like mappers, are indeed quite capable of executing in parallel. |

The MyTransformer example with an injected OutputRowCollector:

    public class MyTransformer implements Transformer<...> {

        @Inject
        @Provided
        OutputRowCollector collector;

        public void transform(InputRow row) {
            // output two records, each with two new values
            collector.putValues("foo", "bar");
            collector.putValues("hello", "world");
        }
    }
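
The @Initialize, @Close and OutputRowCollector points above can be tried out in isolation. What follows is a small sketch of the general pattern in plain Java, under loud assumptions: the `OnInitialize` and `OnClose` annotations, `ListRowCollector`, `SplittingTransformer` and `LifecycleRunner` are all hypothetical names invented here, and the runner is only a guess at the kind of work a framework does around a component, not DataCleaner's actual implementation.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical lifecycle annotations; they only mimic the idea behind @Initialize and @Close.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface OnInitialize {
}

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface OnClose {
}

// Hypothetical collector: gathers any number of output records per input row.
class ListRowCollector {
    final List<Object[]> rows = new ArrayList<Object[]>();

    void putValues(Object... values) {
        rows.add(values);
    }
}

class SplittingTransformer {
    ListRowCollector collector; // set by the runner, like an injected dependency
    final List<String> events = new ArrayList<String>();
    private String delimiter;

    @OnInitialize
    public void init() {
        delimiter = ","; // expensive setup, such as compiling a script, would go here
        events.add("init");
    }

    // Emits one output record per non-empty token, so a row may yield zero or many records.
    public void transform(String value) {
        for (String token : value.split(delimiter)) {
            String trimmed = token.trim();
            if (!trimmed.isEmpty()) {
                collector.putValues(trimmed);
            }
        }
    }

    @OnClose
    public void close() {
        delimiter = null; // release resources held during the batch
        events.add("close");
    }
}

class LifecycleRunner {
    static void invokeAnnotated(Object target, Class<? extends Annotation> annotation) {
        for (Method method : target.getClass().getDeclaredMethods()) {
            if (method.isAnnotationPresent(annotation)) {
                try {
                    method.setAccessible(true);
                    method.invoke(target);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException(e);
                }
            }
        }
    }

    static List<Object[]> run(SplittingTransformer transformer, List<String> input) {
        transformer.collector = new ListRowCollector();
        invokeAnnotated(transformer, OnInitialize.class); // before the first record
        for (String value : input) {
            transformer.transform(value);
        }
        invokeAnnotated(transformer, OnClose.class); // after the last record
        return transformer.collector.rows;
    }

    public static void main(String[] args) {
        SplittingTransformer transformer = new SplittingTransformer();
        List<Object[]> rows = run(transformer, Arrays.asList("a, b", "", "c"));
        System.out.println(rows.size() + " output records, lifecycle: " + transformer.events);
    }
}
```

Because initialization happens once, before any record is processed, the per-record method never has to check whether setup has been done, which is what removes the race condition in the lazy variant of the prototype.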

Fifth step: When you're satisfied with the extension, publish it on the ExtensionSwap. Simply click the "Register extension" button and follow the instructions on the form. Your extension will then be available to everyone and make others in the community happy!

I hope you found this blog useful as a way to get into DataCleaner extension development. I would be interested in any kind of comment regarding the extension mechanism in DataCleaner - please speak up and let me know what you think!