Earlier I promised you some "big news" for today, and here it is... Hmm, where to start? There's so much to say.

OK, let's start with the software; after all, that's what I think most of my blog readers care about most:

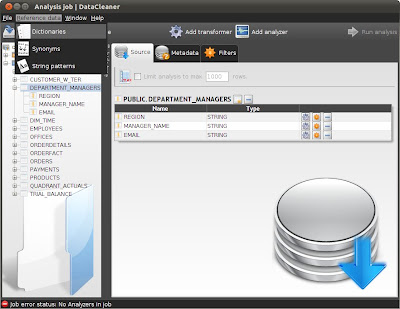

DataCleaner 2.0 was released!

To me this is the biggest news for the DataCleaner community in a LONG time. DataCleaner 2.0 is a major release that my new employer (read more below) and I have put a lot of effort into. I just had a look at some source code statistics, and the 2.0 release is actually larger (in terms of lines of code, source code commits, contributions etc.) than all previous DataCleaner releases combined. I don't want to say a lot about the new functionality here, because it's all presented quite well at the DataCleaner website.

More information:

Watch out, dirty data! DataCleaner 2.0 is in town!

MetaModel 1.5 was released!

My other lovechild, MetaModel, has also just been released in version 1.5! MetaModel 1.5 is also quite a big improvement over the previous version, 1.2. The release contains a lot of exciting new features for querying and datastore exploration, as well as a lot of maturity bugfixes.

More information:

What's new in MetaModel 1.5?

And then let's move on to a major announcement that I definitely think will affect the eobjects.org community positively:

Human Inference acquires eobjects.org

This might come as a surprise to quite a lot of you, so let me explain a bit. For some years DataCleaner and the other eobjects.org projects have been independent open source projects that I've invested a lot of time in. The projects have grown nicely in terms of users, and the ideas have been plentiful. My ambitions for the projects have always been high, but they suffered from the fact that I was mostly working on them in my free time. One of the many fun things about doing these projects is that I've gotten to meet a lot of exciting people who thought my projects were interesting. At one point I met some people from the data quality vendor Human Inference, who thought DataCleaner was great and wanted to know if they could somehow use it in collaboration with their commercial offerings. From my side of the table, I was thinking that their products offered some features that would be an excellent addition to DataCleaner's functionality. So we made a deal to try to raise the value for both parties. With that in mind, here's the press release about it:

More information:

Human Inference completes acquisition of DataCleaner and eobjects.org

I have a new job

I now work with Human Inference to actively grow the DataCleaner project and MetaModel, as well as Human Inference's commercial products. We're building really exciting cloud-based data quality services that I think will complement the open source offering nicely. Of course it's not all going to be free, but I promise that even for users who don't want to pay for the additional services, the acquisition and my new job will still be beneficial, because we're adding a lot of new resources to the projects, improving both the open source parts and the commercial plug-ins.

Finally, I also want to mention that Data Quality Pro has a great article covering much of this news, including an interview with Sabine Palinckx, the CEO of Human Inference, and me.

More information:

Open Source DataCleaner gets a major update, Human Inference enters the Open Source Data Quality Market